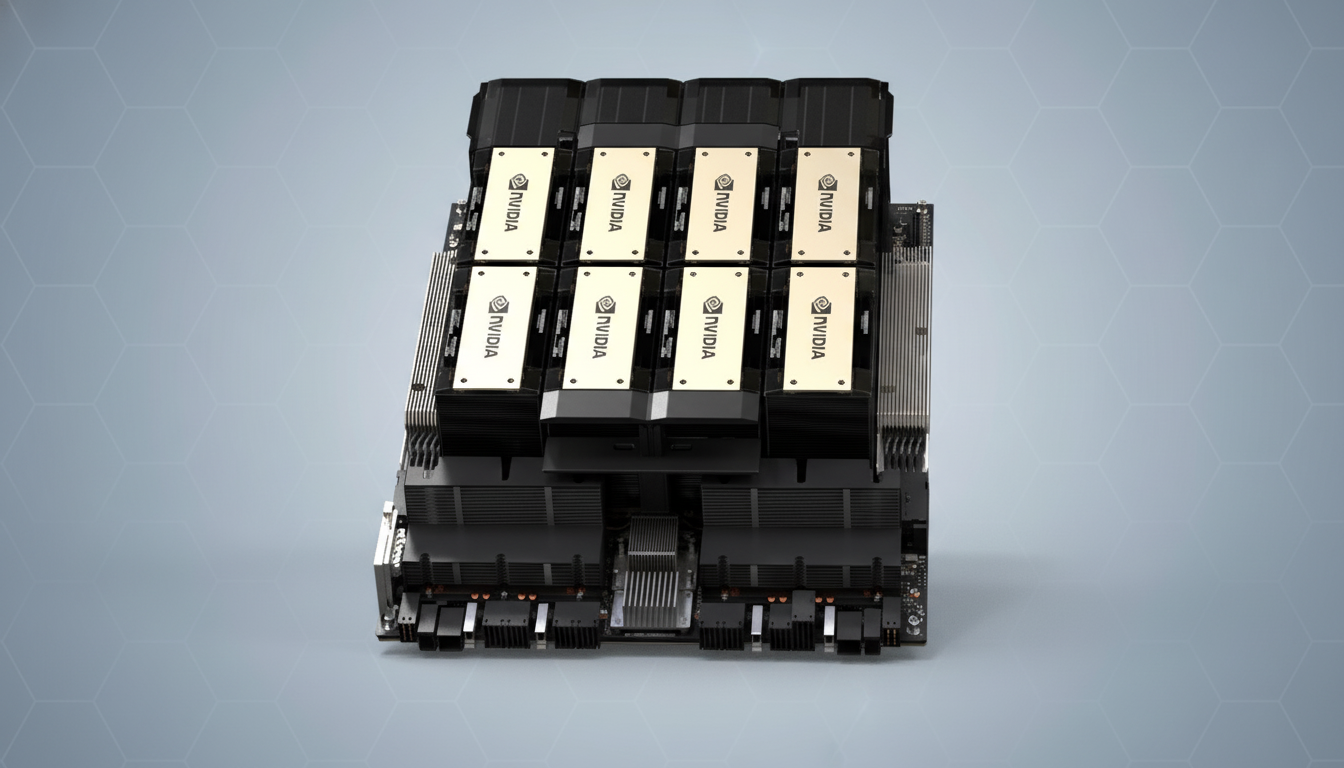

Nvidia is reportedly considering ramping up production of its H200 data center GPUs, as demand from Chinese tech giants surges. According to sources familiar with the matter, companies in China are placing substantial orders, signaling a significant shift in strategy following Nvidia’s recent announcement regarding licensed H200 sales in the country. This development marks a critical moment for Nvidia, especially given the importance of China in the global market for artificial intelligence (AI) technology.

The H200 chip represents a significant advancement in Nvidia’s Hopper architecture. With up to 141GB of HBM3e memory and approximately 4.8TB/s of memory bandwidth, the H200 is designed to speed up the training and deployment of large language models. Internal benchmarks suggest that the H200 outperforms its predecessor, the H100, most notably in inference throughput. These capabilities appeal to Chinese companies such as Alibaba and ByteDance, which are exploring large-scale purchases of H200 units to optimize their AI applications.
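To put the memory figures in perspective, the short Python sketch below works through two back-of-the-envelope numbers. Only the 141GB capacity and the roughly 4.8TB/s bandwidth come from the figures cited above. The function names, the 70GB model size, and the simplification that generating each token requires reading all model weights from memory once are illustrative assumptions, not Nvidia benchmarks.

```python
# Back-of-the-envelope sketch. Only the two H200 figures below come from
# the article; everything else is an illustrative assumption.
H200_MEMORY_GB = 141           # HBM3e capacity cited in the article
H200_BANDWIDTH_GB_S = 4800.0   # ~4.8 TB/s cited in the article, in decimal GB/s


def full_memory_sweep_ms(memory_gb=H200_MEMORY_GB,
                         bandwidth_gb_s=H200_BANDWIDTH_GB_S):
    """Time to read every byte of on-package memory once, in milliseconds."""
    return memory_gb / bandwidth_gb_s * 1000.0


def decode_rate_ceiling(weights_gb, bandwidth_gb_s=H200_BANDWIDTH_GB_S):
    """Rough upper bound on tokens per second for a single request.

    Assumes (hypothetically) that each generated token requires streaming
    all model weights from memory exactly once and that nothing else limits
    throughput. Real systems batch requests and hit other bottlenecks.
    """
    if weights_gb <= 0:
        raise ValueError("weights_gb must be positive")
    return bandwidth_gb_s / weights_gb


def fits_on_one_gpu(weights_gb, memory_gb=H200_MEMORY_GB):
    """True if the weights alone fit in memory (ignores cache and activations)."""
    return weights_gb <= memory_gb


if __name__ == "__main__":
    hypothetical_weights_gb = 70.0  # assumed model size, not from the article
    print(f"Full memory sweep: {full_memory_sweep_ms():.1f} ms")
    print(f"Fits on one GPU: {fits_on_one_gpu(hypothetical_weights_gb)}")
    print(f"Decode ceiling: {decode_rate_ceiling(hypothetical_weights_gb):.1f} tokens/s")
```

Under these assumptions, the chip could read its entire memory in roughly 29 milliseconds, which is why memory bandwidth, and not just raw compute, tends to set the ceiling on inference throughput for large models.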

The backdrop to this development is a recent change in U.S. export controls. Previously, the H200 was unavailable to Chinese companies due to stringent regulations affecting advanced accelerators. However, the U.S. Commerce Department has introduced a framework that potentially allows for the licensed sale of H200 GPUs to China. While the exact details of this framework remain unclear, it has opened the door for Nvidia to tap into a lucrative market that previously posed significant barriers.

Nvidia has emphasized that it will carefully manage supply to ensure that licensed shipments to China do not interfere with availability for U.S. customers. This approach is crucial as Nvidia holds over 80% of the AI accelerator market, according to an April analysis by Omdia. Any shifts in regional demand could have far-reaching implications for the company’s supply chain and for hyperscale cloud providers seeking to meet their growing needs.

Despite the potential for increased production, Nvidia faces challenges related to packaging capacity. The H200 employs TSMC’s CoWoS technology, which has been a bottleneck in GPU manufacturing for the last two generations. Although TSMC is increasing its CoWoS output, strong demand from global cloud providers creates a complex balancing act for Nvidia. Securing sufficient substrate and HBM3e supply will be critical for scaling up H200 production to serve Chinese demand.

Chinese companies are looking to leverage the H200’s capabilities for developing multilingual foundation models and video generation systems, among other applications. The increased memory and bandwidth of the H200 make it particularly suitable for large-context language models and other demanding AI workloads. Should import approvals be granted, these Chinese firms may quickly transition from interim solutions like the H20 to the more powerful H200, potentially improving the quality of their AI services.

However, Nvidia’s position is not without competition. Domestic alternatives, such as Huawei’s Ascend line, are gaining traction in the Chinese market, alongside several startups developing training-class accelerators. Although Nvidia’s performance and software ecosystem are superior for many high-end workloads, the company must remain vigilant about the evolving competitive landscape. Analysts estimate that China accounted for approximately 20-25% of Nvidia’s data center revenue before export rules were tightened, making it a strategically vital market.

As Nvidia navigates these complexities, a moderated ramp-up of H200 shipments to China could unlock additional revenue while reinforcing its competitive advantages, including its CUDA ecosystem. Nonetheless, the company faces inherent risks from regulatory uncertainty, which could affect licensing approvals. Increasing supply without compromising availability for other customers will be essential for Nvidia going forward.

Moving ahead, several indicators will be crucial to watch, including formal import clearances, TSMC’s adjustments in CoWoS allocations, and purchasing commitments from leading Chinese cloud providers. The convergence of these elements could lead to rapid advancements in the deployment of H200 clusters for AI training and inference, intensifying competition with domestic alternatives and reshaping the near-term market landscape for advanced GPUs.